Introduction

eLearning is the future of learning: the global eLearning industry was estimated at USD 399.8 billion in 2022 and is expected to grow at a CAGR of 14% between 2023 and 2032. The COVID-19 pandemic brought about a tectonic shift in how colleges and universities functioned worldwide, further propelling the need for digital education and online exams. Four years on, the post-pandemic reality is that the need for safe, well-conducted online examinations is here to stay.

Online remote proctoring, also known as virtual proctoring, monitors candidates taking online assessments to ensure the integrity of the test and the authenticity of the test taker. It does so by providing a monitoring entity that evaluates all crucial movements during the test. However, while these tools continue to evolve, careful consideration must be given to the new challenges and stressors they may bring for students. Most students are already anxious about the exam itself, and the added pressure of remote proctoring can exacerbate these feelings. By implementing thoughtful practices, we can mitigate these challenges and create a positive and supportive exam-taking environment.

7 Ways to Improve Student Experience and Create a Stress-free Environment

Selecting the Right Technology and Maintaining Inclusivity

One key component of a stress-free remote proctoring experience is the selection of appropriate technology that is secure and accessible across different devices and operating systems. Additionally, it should have an intuitive interface that helps students navigate with ease and ensures equity and accessibility for everyone. Is the tool inclusive of students with varying needs? If not, it is best to go back to the drawing board and figure out how to make it so.

Good remote-proctoring tech should have accommodations for students with disabilities and special needs. This includes assistive navigation, multi-language support, audio instructions, and support for adaptive devices to help level the playing field and reduce stress. Cloud-based proctoring solutions that integrate with various LMSs have also emerged, offering scalability and flexibility. These platforms accommodate a large volume of simultaneous test-takers and provide a streamlined experience for educational institutions and students.

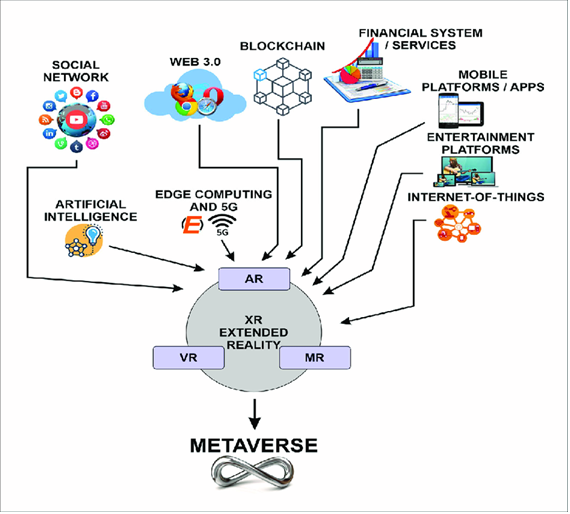

Enhanced AI-based Proctoring

Recent advancements in AI and machine learning have significantly enhanced remote proctoring, improving both security and convenience. These technologies enable unobtrusive, real-time monitoring of students taking an exam: they detect unusual behavior or potential cheating by analyzing patterns in movement, eye direction, and other biometric and behavioral data, without constant human oversight. For students who might feel pressured by the idea of being observed by a human proctor, the discreetness of AI proctoring creates a more relaxed and less intimidating testing environment.

AI in proctoring can also help minimize disruptions and reduce the stress associated with technical failures by quickly diagnosing problems and suggesting solutions.
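To make the idea of pattern-based detection concrete, here is a minimal sketch in Python. It is not how any particular proctoring product works. It assumes a hypothetical upstream face detector and gaze estimator that emit one observation per video frame, and it flags only sustained periods of inattention, so that a brief glance away does not count against the student. The `FrameObservation` type, the function name, and the five-second threshold are all illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass
class FrameObservation:
    timestamp: float      # seconds since the exam started
    face_visible: bool    # assumed output of a hypothetical face detector
    gaze_on_screen: bool  # assumed output of a hypothetical gaze estimator


def flag_sustained_inattention(frames, min_duration=5.0):
    """Return (start, end) intervals in which the student was out of frame
    or looking away for at least min_duration seconds."""
    intervals = []
    start = None  # timestamp of the first inattentive frame in the current run
    last = None   # timestamp of the latest inattentive frame in the current run
    for frame in frames:
        attentive = frame.face_visible and frame.gaze_on_screen
        if not attentive:
            if start is None:
                start = frame.timestamp
            last = frame.timestamp
        else:
            # The run ended; keep it only if it lasted long enough.
            if start is not None and last - start >= min_duration:
                intervals.append((start, last))
            start = None
    # Handle a run that is still open when the recording ends.
    if start is not None and last - start >= min_duration:
        intervals.append((start, last))
    return intervals
```

A duration threshold of this kind is one simple way to keep monitoring unobtrusive: short, natural movements are ignored, and only longer intervals are surfaced for review.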

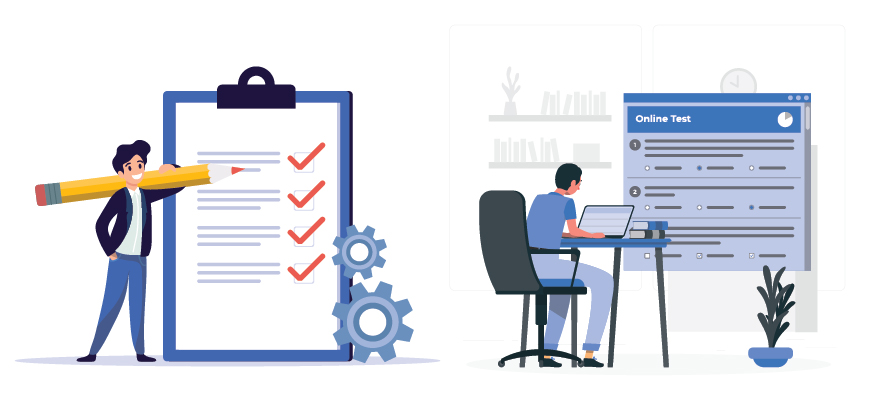

Familiarize Students with the Interface

Clear instructions and practice sessions can diminish any concerns students may have about navigating the technology. Practice tests help students familiarize themselves with the remote proctoring software and reduce anxiety about the testing process. Additionally, they help demonstrate the types of behaviors that are expected during the exam, such as staying within the camera frame at all times and avoiding distractions.

Reduce anxiety levels by clearly communicating the purpose of remote proctoring to the students, establishing the expectations, and distinguishing between desired and undesired behaviors during the exam.

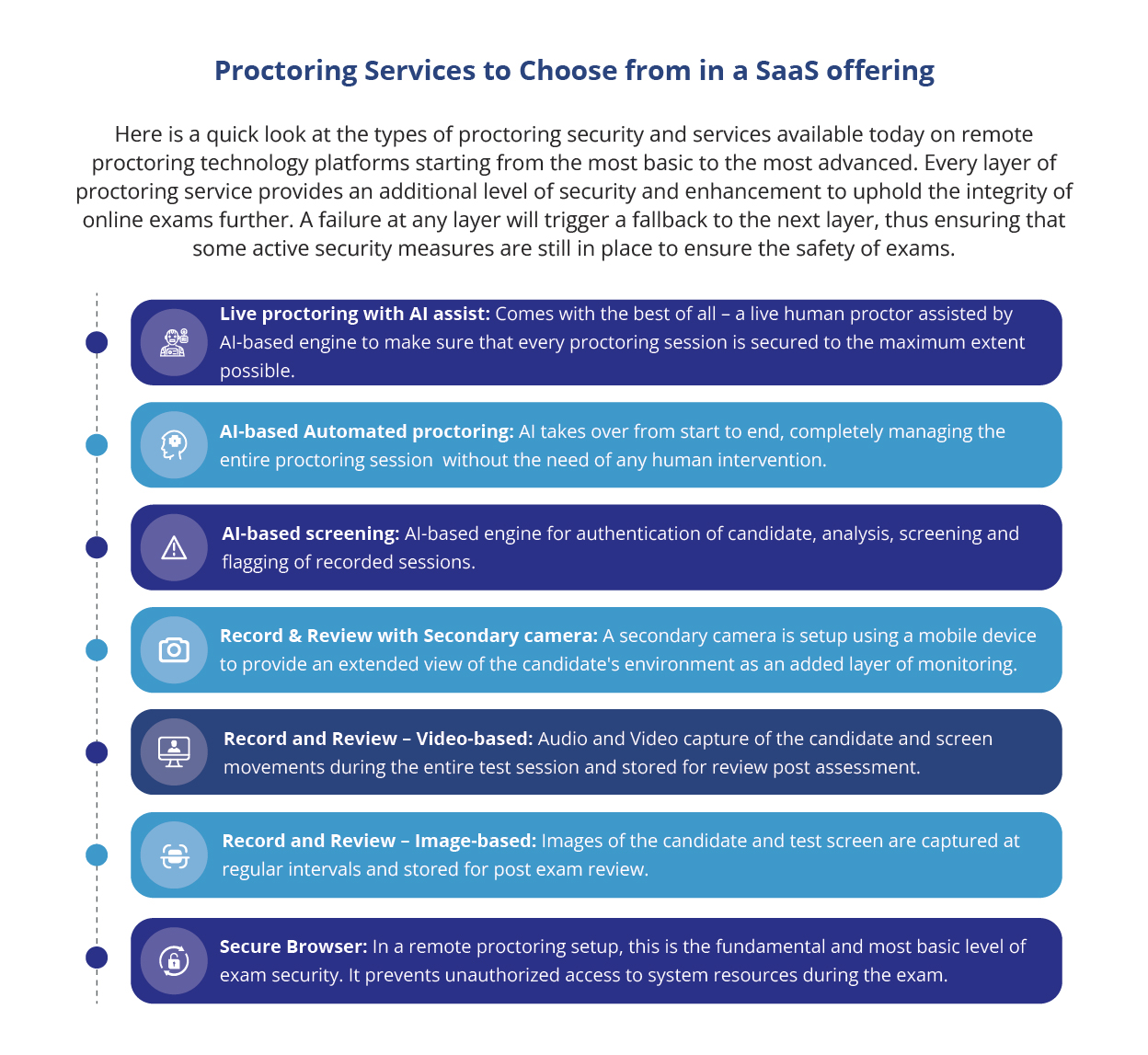

Using Secure Browsers

Using a secure, locked-down web browser environment to conduct exams blocks access to other websites and unauthorized supplementary resources. This promotes a safe exam environment and reduces the degree of proctor scrutiny required. It also alleviates students’ fear of being wrongly accused of malpractice.

Protect the Integrity of Student Data

One of the most common concerns students have with proctoring tools is providing device access and the fear that the tool may collect confidential data without their knowledge.

It is best to address these concerns at the very beginning: educate students on the extent of access and impact of the remote-proctoring tool on their devices. Be transparent about what data is required for the authentication and proctoring process and seek the student’s consent at each step. Provide clarity on how the tool collects their data, the duration for which data is stored, and whom to contact if they have any privacy-related concerns. Providing students with options for anonymous feedback and for reporting any incidents of misconduct can help maintain trust and transparency in the proctoring process. Additionally, we must emphasize developing systems around the proctoring tools to keep the collected data safe and secure.

Moreover, advancements in network security and data encryption have helped address concerns about privacy and data breaches. Enhanced encryption protocols help ensure that sensitive data is securely transmitted and stored, protecting both the institutions and the test-takers.
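The transparency points in this section (what is collected, why, for how long, and whom to contact) can be captured in a simple record that is shown to the student and used to enforce retention. The Python sketch below is illustrative only: the `ConsentRecord` fields, the function names, and the sample retention periods are assumptions, not the design of any specific proctoring tool.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass(frozen=True)
class ConsentRecord:
    student_id: str
    data_type: str        # e.g. "webcam_video" or "screen_capture" (illustrative)
    purpose: str          # why the data is needed, in plain language
    granted_at: datetime  # when the student gave consent
    retention_days: int   # how long the data may be stored
    privacy_contact: str  # whom to contact with privacy concerns

    def expires_at(self):
        return self.granted_at + timedelta(days=self.retention_days)

    def is_expired(self, now):
        return now >= self.expires_at()


def records_due_for_deletion(records, now):
    """Return the records whose retention period has elapsed as of `now`."""
    return [record for record in records if record.is_expired(now)]
```

A usage example under the same assumptions:

```python
granted = datetime(2024, 1, 1, tzinfo=timezone.utc)
records = [
    ConsentRecord("s-001", "webcam_video", "identity verification",
                  granted, 30, "privacy@example.edu"),
    ConsentRecord("s-001", "screen_capture", "exam review",
                  granted, 90, "privacy@example.edu"),
]
now = datetime(2024, 2, 15, tzinfo=timezone.utc)
due = records_due_for_deletion(records, now)  # only the 30-day record is due
```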

Use Hybrid Proctoring Systems

As potent as AI algorithms are, coupling them with the human touch can further help students trust online proctoring applications. Hybrid proctoring systems that combine AI discrepancy-detection tools with experienced human proctors have the potential to provide the best results.

AI can enhance the fairness and consistency of remote proctoring by reducing the biases that human proctors might unconsciously introduce. At the same time, students feel more at ease if they can contact a proctor via chat or other means of communication while taking an exam. A hybrid proctoring system offers the best of both worlds.
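One way to picture the hybrid approach is as a triage step: the AI raises flags, and a human proctor makes the final decision. The Python sketch below is a simplified illustration under stated assumptions. The `AiFlag` type, the confidence score, and the 0.6 threshold are hypothetical and do not describe a specific product.

```python
from dataclasses import dataclass


@dataclass
class AiFlag:
    session_id: str
    reason: str
    confidence: float  # hypothetical model score between 0 and 1


def triage(flags, review_threshold=0.6):
    """Split AI flags into those queued for human review and those dismissed.

    No flag leads to an automatic penalty; a human proctor decides.
    """
    needs_review = []
    dismissed = []
    for flag in flags:
        if flag.confidence >= review_threshold:
            needs_review.append(flag)
        else:
            dismissed.append(flag)
    # Show the most confident flags first so proctors see likely issues sooner.
    needs_review.sort(key=lambda flag: flag.confidence, reverse=True)
    return needs_review, dismissed
```

Keeping the human decision as a separate step means a low-confidence flag never reaches a student as an accusation, which supports the trust this section describes.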

Real-time Support While Taking the Exam

Students naturally tend to feel stressed during an exam. In addition, the feeling of being monitored by the remote proctoring tool can make navigational issues and technical difficulties harder to cope with. In these scenarios, having a real-time support team on hand can significantly improve the student experience. Support teams must be experienced and able to resolve technical difficulties quickly so that the exam runs without hassle.

Conclusion

Creating a stress-free remote proctoring experience for students requires a holistic approach that addresses communication, training, and technology strategies. Select appropriate technology, clearly communicate Dos and Don’ts to the students, provide practice runs to familiarize them with the interface, and prioritize equity and privacy. Ultimately, educators can help students feel more confident and trusting of the remote-proctoring experience by fostering a supportive and inclusive approach.

Excelsoft’s remote proctoring tool, EasyProctor, helps monitor and record candidate movements, mouse pointer activity, and screen activity during a test. EasyProctor supports proctoring in Live, Record, and Review modes. It works seamlessly with secure, lockdown browsers to increase security and, in turn, decrease stress for the students.